2025 Keynotes

Keynote 1: KIOXIA: Optimize AI Infrastructure Investments with Flash Memory Technology and Storage Solutions

Tuesday, August 5th @ 11:00 AM - 11:30 AM

Neville Ichhaporia is Senior Vice President and General Manager of the SSD Business Unit at KIOXIA America, Inc., responsible for SSD marketing, business management, product planning, and engineering, overseeing the SSD product portfolio aimed at cloud, data center, enterprise, and client computing markets. Neville has over 20 years of extensive industry experience, including product management, strategic marketing, new product development, hardware engineering, and R&D. Prior to joining KIOXIA in 2016, Neville held diverse roles at Toshiba Memory America, SanDisk, Western Digital Corporation, and Microchip. Neville Ichhaporia holds an MBA from the Santa Clara University Leavey School of Business, an MS in Electrical Engineering and VLSI Design from the University of Toledo, Ohio, and a BS in Instrumentation and Control Systems from the University of Mumbai.

Mr. Katsuki Matsudera is a General Manager of the Memory Technical Marketing Managing Department at KIOXIA Corporation. He has played a key role in the planning and marketing of KIOXIA’s groundbreaking BiCS FLASH™ Generation 8 3D flash memory, which uses CBA (CMOS directly Bonded to Array) architecture to take flash memory to the next level. Mr. Matsudera graduated with a master’s degree in Mechanical Engineering from Kyoto University in 1996 and joined Toshiba Corporation the same year. He later worked on groundbreaking products, including the world's first NAND flash memory using TSV technology and 3D flash memory with 4-bit-per-cell technology, referred to as BiCS FLASH™ QLC. Today, Katsuki directs the company’s comprehensive product strategy planning and execution, as well as new market development for future BiCS FLASH™ generations.

For over three decades, KIOXIA, the inventor of NAND flash memory technology, has continued to innovate and lead the future of memory and storage. With the introduction of its upcoming BiCS FLASH™ Generation 10 3D flash memory, along with a diverse portfolio of SSDs, KIOXIA is meeting the ever-increasing demand for faster, denser, and more power-efficient storage for Artificial Intelligence. AI is now dominating investments in data center infrastructure; however, a “one-size-fits-all” storage solution will neither optimize these investments nor maximize ROI. There is no single, homogeneous AI workload: each stage of the AI data lifecycle has its own unique storage requirements, which need to be matched with the right storage solution to optimize AI investments. Discover how KIOXIA’s next generation of memory and storage solutions can Scale AI Without Limits – Make it with KIOXIA!

Keynote 2: FADU: Pushing the Storage Frontier: Next-Generation SSDs for Tomorrow’s Datacenters

Tuesday, August 5th @ 11:40 AM - 12:10 PM

Jihyo Lee is the CEO and co-founder of FADU, a leading fabless semiconductor company revolutionizing data center and storage solutions for next-generation computing architectures. Under his leadership, FADU has become a hub of innovation, bringing together top industry talent to drive advancements in technology. Before founding FADU, Jihyo was a partner at Bain & Company, where he honed his strategic expertise. He is also a successful serial entrepreneur, having built and led multiple ventures across the technology, telecom, and energy sectors. Jihyo holds an MBA from the Wharton School of the University of Pennsylvania and both bachelor’s and master’s degrees in Industrial Engineering from Seoul National University. His unique combination of academic excellence, entrepreneurial spirit, and leadership experience makes him a visionary in the tech industry.

Ross Stenfort is a Hardware Systems Engineer at Meta delivering scalable storage solutions. He has 30+ years of experience developing and bringing leading-edge storage products to market. Ross works closely with industry partners and standards organizations, including NVM Express, SNIA/EDSFF, and the Open Compute Project (OCP). With experience that includes ASIC design, he appreciates the design challenges SSD providers face in delivering performance and QoS within a shrinking power envelope. Ross holds over 40 patents.

The rapid evolution of datacenter infrastructure is being driven by the need for higher performance, ultra-high capacity, and power efficiency. This talk explores how new and evolving AI workloads are driving storage requirements and delves into the challenges and opportunities these workloads create. The keynote will also provide a comprehensive overview of where the industry stands today and the opportunities that lie ahead. We will share insights from both the customer and the supplier perspectives. Additionally, we will discuss the importance of ecosystem collaboration and introduce new business models. Join us as we explore the new storage frontier and chart the course for the next generation of datacenter infrastructure.

Keynote 3: Micron: Data is at the Heart of AI

Tuesday, August 5th @ 01:10 PM - 01:40 PM

Jeremy is an accomplished storage technology leader with over 20 years of experience. At Micron he has a wide range of responsibilities, including product planning, marketing, and customer support for the Server, Storage, Hyperscale, and Client markets globally. Previously he was GM of the SSD business at KIOXIA America and spent a decade in sales and marketing roles at the startups MetaRAM, Tidal Systems, and SandForce. Jeremy earned a B.S.E.E. from Cornell University, is an alumnus of the Stanford Graduate School of Business, and holds more than 25 granted or pending patents.

Without data, there is no AI. To unlock AI’s full potential, data must be stored, moved, and processed with incredible speed and efficiency from the cloud to the edge. As AI substantially increases performance requirements, the need for optimized power/cooling, rack space, and capacity also rises. This session explores how Micron’s cutting-edge memory and storage solutions, such as PCIe Gen6 SSDs, high-capacity SSDs, HBM3E, and SOCAMM, are driving the AI revolution by reducing bottlenecks, optimizing energy efficiency, and turning data into intelligence. Join us as we look at Micron’s end-to-end, high-performance, energy-efficient memory and storage portfolio and how these innovations fuel the AI revolution to enrich life for all.

Keynote 4: Silicon Motion: Smart Storage in Motion: From Silicon Innovation to AI Transformation Across all Spectrums

Tuesday, August 5th @ 01:40 PM - 02:10 PM

Alex Chou joined Silicon Motion in December 2023 with over 30 years of industry experience in ASIC design/applications engineering, product marketing, business strategy, and executive-level business engagement. Prior to Silicon Motion, Alex was Senior Vice President at Synaptics, where he was the GM responsible for the growth and success of its Wireless Connectivity business. Before that, he spent more than 18 years at Broadcom, where, as VP of Product Marketing in Broadcom's Wireless Connectivity BU, he was responsible for enterprise networking, Wi-Fi network solutions, and client Wi-Fi/BT/GNSS. Alex holds a BS degree from National Cheng Kung University in Taiwan and an MS in Computer Engineering from Syracuse University in New York.

Nelson Duann has been with Silicon Motion since 2007 and has nearly 25 years of experience in product design, development, and marketing in the semiconductor industry. He most recently led Silicon Motion's marketing and R&D efforts and has played a key role in leading the company's OEM business for mobile storage and SSD controller solutions, helping to introduce these products and grow them into today's market leaders. Prior to Silicon Motion, he worked at Sun Microsystems on UltraSPARC microprocessor projects. Nelson has an MS in Communications Engineering from National Chiao Tung University in Taiwan and an MS in Electrical Engineering from Stanford University.

AI is transforming every layer of computing. However, its full potential cannot be realized without seamless data movement and intelligent orchestration. As data moves from hyperscale cloud training platforms to low-latency edge inference engines, storage is no longer a static endpoint; it has become the critical infrastructure that keeps AI in motion. In this keynote, we will explore how next-generation storage solutions are driving the AI revolution by enabling high-throughput data transfer, ultra-low latency, and intelligent workload orchestration across the entire data pipeline, from cloud to edge. We will highlight innovations in flash storage architecture, interface performance, and AI-optimized data paths that overcome infrastructure bottlenecks and deliver greater speed, scalability, and efficiency. From the data center to edge devices, and from data to intelligence, Silicon Motion is unlocking the full power of data for AI.

Keynote 5: SK hynix: Where AI Begins: Full-Stack Memory Redefining the Future

Tuesday, August 5th @ 02:10 PM - 02:40 PM

Jeff (Joonyong) Choi is Vice President and Head of the HBM Business Planning Group at SK hynix, where he leads portfolio planning and strategic direction for next-generation High Bandwidth Memory (HBM) products. In this role, he drives the successful enablement of advanced memory solutions and leads high-impact business engagements with key players across the global AI ecosystem. Jeff plays a pivotal role in orchestrating product development, customer collaboration, and business strategy into a seamless, integrated process—helping SK hynix position itself at the forefront of innovation in AI and high-performance computing. Prior to his current role, he led product planning for mobile and graphics DRAM, contributing significantly to SK hynix’s market leadership in performance memory. Jeff holds a Master’s degree in Electrical Engineering from KAIST and an MBA from the MIT Sloan School of Management.

Chunsung Kim, VP of eSSD Product Development at SK hynix, currently leads the development of the company’s enterprise SSD products. With over 15 years of experience in S.LSI development, Chunsung Kim established and built SK hynix’s in-house NAND Flash controller teams and capabilities in 2011.

With well-rounded expertise in both SSD engineering and business strategy, he has also played a pivotal role in stabilizing SK hynix’s global R&D operations and management. In addition, he is spearheading the company’s initiatives to explore the future of storage solutions in the AI era.

Chunsung Kim holds a Master’s degree in Control and Instrumentation Engineering from Chung-Ang University in South Korea.

As the AI industry rapidly shifts its focus from AI training to AI inference, memory technologies must evolve to support high-performance, power-efficient token generation across Generative, Agentic, and Physical AI. Performance and power efficiency remain two critical pillars shaping the scalability and TCO of AI systems. To address these demands, SK hynix delivers a comprehensive memory portfolio, spanning HBM, DRAM, compute SSDs, and storage SSDs, optimized for diverse AI environments including data centers, PCs, and smartphones. HBM, with its structural advantages of high bandwidth and low power consumption, provides the flexibility to meet a wide variety of customer needs. Meanwhile, our storage solutions are designed to enable fast, reliable access for data-intensive workloads in AI inference scenarios. Together, these efforts form a mid-to-long-term roadmap focused on scalability, performance, and cost optimization. This keynote will highlight how SK hynix’s memory technologies are enabling the infrastructure required for next-generation AI.

Keynote 6: Samsung: Architecting AI Advancement: The Future of Memory and Storage

Tuesday, August 5th @ 02:40 PM - 03:10 PM

Hwa-Seok Oh leads the Controller Development team at Samsung, where he oversees the commercialization of flash storage products that include mobile memory devices like eMMC and UFS, as well as client-, server-, and enterprise-class SSDs. Hwa-Seok Oh joined Samsung Electronics in 1997 as a SoC design engineer, focusing on the development of network and storage controllers. While working on flash storage products, he pioneered the world’s first UFS products. He also spearheaded the creation of high-performance NVMe SSD controllers for datacenters. More recently, he has been at the forefront of developing new flash storage technologies, contributing to innovations such as Samsung’s SmartSSD and Flash Memory-based CXL Memory Module. Hwa-Seok Oh holds a Bachelor’s and a Master’s degree in Computer Science from Sogang University, earned in 1995 and 1997, respectively.

Daihyun Lim joined Samsung in 2023 and serves as Vice President of Memory for Samsung Semiconductor, leading HBM I/O design as a Master (VP of Technology). Prior to Samsung, he worked in the IBM ASIC group on high-speed serial link design from 2008 to 2017, and then at Nokia Network Infrastructure, designing silicon photonics transceivers as a Distinguished Member of Technical Staff (DMTS) from 2017 to 2022. His areas of expertise include high-speed memory interface circuits, signal and power integrity, and optical transceivers. Daihyun Lim received his B.S. in Electrical Engineering from Seoul National University in 1999, and his S.M. and Ph.D. degrees in Electrical Engineering and Computer Science from the Massachusetts Institute of Technology (MIT) in 2004 and 2008, respectively. In 2017, he was recognized as the author of the most frequently cited paper in the history of the VLSI Symposium.

As AI workloads grow more complex, memory and storage architectures must evolve to deliver ultra-high bandwidth, low latency, and efficient scalability. Samsung’s latest innovations, including HBM, DDR5, CXL, PCIe Gen5/Gen6 SSDs, and UFS 5.0, are engineered to meet these demands across data center and edge environments. By refining memory hierarchies and enhancing data throughput and integrity, Samsung is advancing infrastructure that delivers compelling value for next-gen AI systems. This session will highlight the pivotal role of memory and storage within the AI infrastructure framework and offer a forecast of upcoming technological advancements and the industry outlook.

Keynote 7: NEO Semiconductor: Breaking the Bottleneck: Disruptive 3D Memory Architecture for AI

Wednesday, August 6th @ 11:00 AM - 11:30 AM

Andy Hsu is the Founder and CEO of NEO Semiconductor. He has over three decades of experience in the semiconductor industry, including positions as VP of Engineering and leader of R&D and engineering teams, work that resulted in the development of more than 60 products spanning various non-volatile memories, DRAM, and AI chips. Andy is an accomplished technology visionary and the inventor on 125 granted U.S. patents. He performed research in neural networks and artificial intelligence (AI) while earning a master’s degree in Electrical, Computer, and Systems Engineering (ECSE) from Rensselaer Polytechnic Institute (RPI) in New York. He earned a bachelor’s degree from National Cheng-Kung University in Taiwan.

As AI systems scale, the widening gap between processor and memory performance has become a critical limitation. NEO Semiconductor introduces a breakthrough in 3D memory architecture that eliminates the need for through-silicon via (TSV) processes—dramatically increasing memory bandwidth by up to 10x, while reducing die cost, height, and power consumption by as much as 90%. In this keynote, NEO will explore how rethinking memory from the ground up can unlock new levels of performance and efficiency in AI. Attendees will also get an exclusive preview of a soon-to-be-announced innovation that promises to redefine the future of memory technology.

Keynote 8: Sandisk: The Diversification of Flash Storage - Unlocking the Full Potential of NAND in the AI Era

Wednesday, August 6th @ 11:40 AM - 12:10 PM

Alper Ilkbahar is Executive Vice President of Memory Technology at Sandisk, overseeing NAND technology development, next-generation technologies, corporate research functions, and market development. Most recently, Alper served as Senior Vice President of Global Strategy and Technology at Western Digital. Prior to Western Digital, he was the Vice President of the Data Center Group and General Manager of the Intel Optane Group. In this role, he supported the development of memory and storage solutions by integrating innovative hardware and software for next-generation data centers around Optane Technology.

Earlier in his career, Alper served as Vice President and General Manager of several business units at Sandisk. He started his career in Intel’s microprocessor division, where he worked in design engineering and management. A leader with deep technical expertise, Alper holds more than 50 patents in the fields of semiconductor process, device, design, and testing.

Alper received a bachelor’s in electrical engineering from Boğaziçi University in Istanbul, Turkey, a Master’s in Electrical Engineering from the University of Michigan, and a Master of Business Administration from the Wharton School of the University of Pennsylvania.

Jim Elliott is Executive Vice President and Chief Revenue Officer at Sandisk. In this role, Jim leads efforts to maximize revenue opportunities and drive Sandisk’s growth. A highly respected industry leader with nearly 30 years of experience in the semiconductor memory and storage sector, Jim has held leadership roles at both global corporations and Silicon Valley start-ups. Known as a market visionary, he has played a key role in driving memory and storage transitions and market evolution. He has proven expertise in developing synergistic product portfolios across a wide range of markets, including Server, Data Center, PC, Tablet, Phone, Wearables, and Automotive. Prior to Sandisk, Jim served as Executive Vice President at Samsung Semiconductor, Inc., where he led all Sales and Marketing activities for Samsung’s memory organization in the Americas. There, he was responsible for the sales, marketing, operations, and QA teams for Samsung’s memory business, comprising 3D NAND, SSDs, DRAM, LPDDR, Graphics, HBM, and UFS products. Jim holds a Bachelor of Arts from the University of California, Davis, and a Master of Business Administration from Cal Poly, San Luis Obispo.

Mrinal Kochar is a visionary leader with over 21 years of experience in the SSD, Flash, and DRAM industry, driving innovation, product differentiation, and industry standards. As Corporate Vice President at Sandisk, he leads the SSD engineering organization, spearheading next-generation Flash storage solutions that push the boundaries of speed, efficiency, and reliability. A prolific inventor with 20+ patents, Mrinal has played a pivotal role in advancing memory technology, shaping technology roadmaps, and aligning product strategies with business goals. With a track record of scaling engineering organizations and fostering a culture of collaboration and excellence, he continues to inspire teams and industry partnerships. A recognized thought leader, Mrinal has delivered keynotes at esteemed global conferences, shaping the future of SSD technology and driving sustained business growth in a multi-billion-dollar market. He firmly believes that technology is only as powerful as the people behind it and remains committed to mentoring and developing the next generation of engineers and leaders. Mrinal holds BS and MS degrees in Electrical Engineering from the University of Idaho.

We are witnessing a fundamental shift in which flash moves from a cost-optimized component to a specialized, diverse technology shaped by evolving workload and business requirements. Storage itself is becoming more customizable to match increasingly complex workloads. Sandisk believes that the unique dimensional capabilities of NAND present an unparalleled opportunity to drive innovation within the evolving AI landscape. Join us as we showcase NAND-based solutions that strategically elevate NAND's value to the ecosystem, delivering tangible benefits for the most challenging data-intensive workloads.

Keynote 9: MaxLinear: “Accelerated” Software-Defined Storage Transforming Data Storage at Enterprise Data Centers

Wednesday, August 6th @ 01:10 PM - 01:40 PM

Vikas Choudhary joined MaxLinear in 2024 and is responsible for business strategy and product development for the company’s Connectivity & Storage business. In this role, he drives business and product strategies that enable the next generation of Ethernet (copper) and storage products in data centers, cloud networks, and enterprise storage. His 30+ year career in the semiconductor industry began as an analog/mixed-signal design engineer at STMicroelectronics. From there, he built a successful career at companies such as Silicon Systems/Texas Instruments, PMC-Sierra/Microsemi, and Analog Devices (ADI). Most recently, Vikas served as Vice President of Marketing, Sales, and Systems at Murata-pSemi, overseeing growth across consumer mobile, connectivity, wireless infrastructure, and high-performance multi-market components. Vikas received his MBA in Marketing & Strategy from Northwestern University, an M.S. in Electrical Engineering (MSEE) from UCLA, and a BE from the Birla Institute of Technology (BITS) in Ranchi, India. He is the author of five patents and multiple publications, and the editor of a book on fundamental MEMS technology.

Stephen Bates is an AMD Fellow in the AI Group, focusing on AI storage architectures and software. He is a renowned expert on topics such as NVMe, RDMA, TCP/IP, and NVM. He has worked on a range of complex storage and communication systems, including NVMe controllers and PCIe switches. He enjoys working at the interface between hardware and software and is an active contributor to the Linux kernel and other open-source software projects. He also spent time in academia as an Assistant Professor in Computer Engineering at the University of Alberta. He holds a PhD from the University of Edinburgh, Scotland, and is a Senior Member of the IEEE.

The data storage market is experiencing enormous growth, driven largely by AI adoption. According to Fortune Business Insights, the global cloud storage market is projected to grow sixfold, from $100B to more than $600B, over the next five years. This growth is creating significant challenges:
• Power consumption rising even beyond its current 2% of global energy consumption
• Increasing storage costs as data volumes expand
• Performance bottlenecks with traditional storage solutions
• Security concerns with distributed data

This keynote will address these challenges by proposing novel methods that combine high-performance CPU cores (optimized for performance per watt) with a storage acceleration SoC (system-on-a-chip) to drastically reduce power consumption compared with traditional methods. The talk will discuss architectural trade-offs among offload, inline, and hybrid methods, along with accelerated data services such as deep compression for hot and cold data, data encryption, and quantum resilience, with achievable scale-out at 1 Tb per second. These methods can improve effective storage capacity by a factor of up to 20.

Keynote 10: VergeIO: AI Infrastructure for Everyone: Flattening the Pipeline, Simplifying Deployment

Wednesday, August 6th @ 01:40 PM - 02:10 PM

Yan Ness is an entrepreneur and infrastructure strategist with over 25 years of experience leading technology companies through transformation and scale. As CEO of VergeIO, he guides the company's vision to simplify IT infrastructure and empower organizations to operate sovereign and private clouds with greater efficiency. Yan earned a BS in Computer Science from the University of Michigan.

Greg Campbell is the architect behind VergeOS and a veteran in scalable system design. With a background in distributed computing and a passion for eliminating infrastructure bottlenecks, he leads VergeIO’s engineering efforts to integrate advanced capabilities like AI into the core of the operating system. Greg earned a degree from the University of Michigan-Dearborn.

Today, the complexity of artificial intelligence demands specialized skills, sophisticated tools, and robust infrastructure, making AI both inaccessible and costly for many organizations. IT teams face steep learning curves and operational challenges when developing AI solutions on fragmented infrastructure. Current solutions fail to address the core issue: the ecosystem’s overwhelming complexity. Innovations in AI infrastructure must flatten the AI pipeline and reduce integration burdens. This talk will examine how streamlining the AI ecosystem makes private AI practical for organizations and sovereign entities, enabling them to create secure, self-managed AI environments. These advancements will promote broader AI adoption, leading to faster returns on GPU investments and justifying the use of high-capacity SSD technology in AI workflows. During the keynote, VergeIO will give a live demonstration of VergeIQ and offer a preview of what integrated, sovereign AI looks like in practice.

Keynote 11: KOVE: Rethinking the Box: Why Memory Constraints Are Now a Design Choice

Wednesday, August 6th @ 02:10 PM - 02:40 PM

Dr. John Overton is the CEO and founder of Kove IO, Inc., and is responsible for introducing the world's first software-defined memory offering, Kove: SDM™. Once considered impossible, Kove: SDM™ delivers infinitely scalable memory and unleashes new artificial intelligence and machine learning capabilities while also reducing power consumption by up to 54%. In the late 1990s and early 2000s, Dr. Overton co-invented and patented pioneering technology using distributed hash tables for locality management. This breakthrough technology enabled unlimited scaling and the advent of cloud storage and scale-out database sharding, among other markets. While at the Open Software Foundation in the late 1980s, Dr. Overton wrote software used by approximately two-thirds of the world's workstation market. Dr. Overton has more than 65 issued patents worldwide, has peer-reviewed publications across a number of academic disciplines, and holds post-graduate and doctoral degrees from Harvard and the University of Chicago.

For decades, compute and storage evolved, but memory stayed in the box. We accepted its limits as fact. In this keynote, Kove CEO John Overton challenges that assumption and shows how software-defined memory (SDM) redefines what’s possible. With Kove: SDM™, memory is no longer constrained by local hardware; it becomes a virtualized, high-performance resource, dynamically allocated where and when it’s needed most. Enterprises are now running memory-bound jobs up to 60x faster, achieving 100x container density, and reducing power and cooling costs by up to 54%. From AI/ML model training to risk modeling and real-time analytics, Kove: SDM™ unlocks new agility without code changes or hardware overhauls. Join us to explore how Kove engineered around the limits of physics to rewrite what modern infrastructure can do.

Keynote 12: Where In the World is Tom Coughlin?

Wednesday, August 6th @ 02:40 PM - 03:10 PM

Tom Coughlin, FMS Conference Chair, is President of Coughlin Associates and a digital storage analyst and business/technology consultant. He has more than 40 years of experience in the data storage industry, in engineering and senior management positions. Coughlin Associates consults, publishes books and market and technology reports, and puts on digital storage and memory-oriented events. He is a regular contributor to forbes.com and M&E organization websites. He is an IEEE Fellow, 2025 IEEE Past President, Past President of IEEE-USA, Past Director of IEEE Region 6, and Past Chair of the IEEE Santa Clara Valley Section, and is also active with SNIA and SMPTE. For more information on Tom Coughlin, go to www.tomcoughlin.com.

Tom Coughlin was elected IEEE President-Elect in 2022, served as President-Elect in 2023, and was IEEE President in 2024. During 2024 he traveled 250 days, visiting 26 countries and 13 states, some more than once. In this presentation he will discuss IEEE's focus during his presidency, some of what was accomplished, and a few stories from his travels.

Special Presentation: Executive AI Panel: Memory and Storage Scaling for AI Inferencing

Thursday, August 7th @ 11:00 AM - 12:00 PM

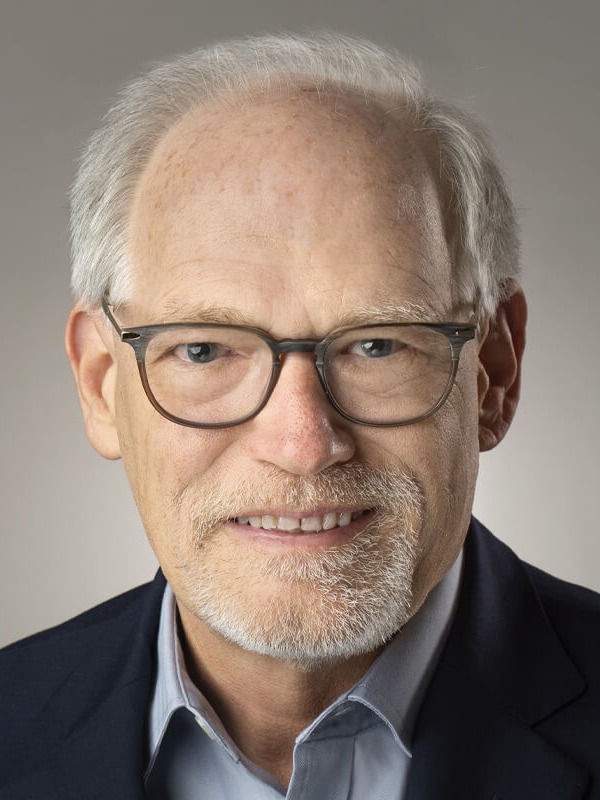

John Kim is director of storage marketing at NVIDIA in the Networking Business Unit, where he helps customers and vendors benefit from high-performance networking, SuperNIC offloads, and Remote Direct Memory Access (RDMA), especially for storage, big data, and artificial intelligence. A frequent blogger, conference speaker, and webcast presenter, John was chair of the Storage Networking Industry Association’s Data, Networking and Storage Forum (SNIA DNSF) for three years. After starting his high-tech career as a network administrator, John worked in solution marketing, product management, and alliances for enterprise software companies and then at enterprise storage vendors NetApp and EMC. He joined Mellanox in 2013 and then NVIDIA in 2020.

Rory Bolt is a senior fellow at KIOXIA America and leads the forward-looking technology and storage pathfinding group for SSDs. He has more than twenty-five years of experience in data storage systems, data protection systems, and high-performance computing, with a pedigree from marquee storage companies. Rory has been granted more than 12 storage-related patents and has several pending. Rory has a BS in Computer Engineering from UCSD.

Vincent (Yu-Cheng) Hsu is an IBM Fellow, CTO, and VP of IBM Storage. His responsibilities include IBM storage technical strategy, future storage technology research, storage system architecture, design, and solutions development. He leads IBM Storage’s strategic initiatives supporting IBM hybrid cloud, data and AI, and cybersecurity. Vince has devoted his entire 30-plus-year career to storage system research and development. He is a Master Inventor at IBM. In 2005 he was named a Distinguished Engineer and Chief Engineer for IBM Enterprise Storage. In 2009, he was named CTO for IBM disk storage, leading the IBM storage technology council, which oversees storage technology for all IBM disk storage products. He was named an IBM Fellow in 2015. Since 2023, Mr. Hsu has represented IBM on the Ceph community governance board, and in 2024 he was appointed a member of the IBM TT (Technology Team). In 2025, Vincent led IBM Storage to deliver the very first content-aware storage solution to accelerate AI data processing and inferencing. Vincent is a graduate of the University of Arizona and holds a Master of Science degree in Computer Engineering and an MBA from the Eller College of Management of the University of Arizona.

A technology executive and leader with more than 20 years of cross-functional experience in early- and growth-stage technology companies, John is currently VP of global business development and strategic alliances at VAST Data, focused on enabling AI, data analytics, and cloud use cases for large data organizations. Prior to joining VAST, John led product management and product strategy teams across a wide range of technology verticals, including cloud infrastructure, network and application performance management, custom hardware (silicon), big data/analytics, storage, and hyper-converged infrastructure. Starting his career as a database engineer gave him an on-ramp into the world of unlocking meaningful insight from large datasets, a passion he retains today. John holds a B.S. in Computer Science from the University of Texas.

With more than 30 years of experience at SK hynix, Sunny Kang has led numerous high-impact initiatives as a seasoned expert in DRAM Planning and New Product Enabling. His role as a JEDEC representative, contributing to memory standardization and ecosystem development, has been a cornerstone of his career. Since relocating to SK hynix America in 2022, he has led the DRAM Technology teams, overseeing future pathfinding and roadmap alignment with key customers such as NVIDIA. His responsibilities also include New Product Enabling and customer technical support related to mass-production quality. A critical aspect of his role is ensuring that SK hynix's technology roadmaps are closely aligned with customer needs, enabling seamless integration and optimal performance.

Raw bandwidth is important for AI training workloads, but AI inference needs that and more: distributed solutions with AI-optimized, low-latency networking, and intelligent memory and storage for optimum performance. This panel explores how ultra-high-performance, AI-optimized storage networking and GPU-enhanced AI storage solutions can dramatically accelerate data transfers between memory and local and remote storage tiers. This enables dynamic resource allocation, significantly boosting AI inference request throughput. We will explore how this combination addresses the challenges of scaling inference workloads across large GPU fleets, moving beyond traditional bottlenecks. We have assembled a panel of experts from inside NVIDIA and across the storage and memory industry to provide insight on how to maximize the number of AI requests served while maintaining low latency and high accuracy.